Spoke At SCALE, And Made Something Neat

A talk about threats and complexity. A nifty command line interface for GPT.

You might have noticed the radio silence for a couple months here. That’s because I was prepping for a talk at SCALE! I didn’t want to say anything about the talk in case I couldn’t make it – but I did, and the talk went well!

It’s linked below, along with:

- A great example of just how adversarial the space really is
- Some quick thoughts on LLMs (Large Language Models – you know, ChatGPT) and how likely I think it is that they’ll take jobs from senior-ish devs
- Some tooling I’ve been working on for using LLMs from the command line
- Some tooling I’ve been working on for using LLMs from chat programs and SMS

The Talk

The talk was a very broad overview of the things I’ve been talking about on Twitter – the ridiculous complexity and often surprising threats facing a global social network. Although it was given to a technical audience, most of the talk is not particularly technical and can be understood by most people. It’s a good watch, although I clearly need to learn how to wear a headset mic.

I’m already looking forward to giving it at another conference sometime to work out the kinks with A/V, mic placement, and idea flow – but overall, I did a fair job of getting my point across.

Honestly, I went a little crazy trying to put it all together, but it feels great to have put it out there. If you know of another conference where the talk might go over well, let me know! I really want to get it polished up and give the best talk I can.

Don’t ever forget it’s an adversarial environment.

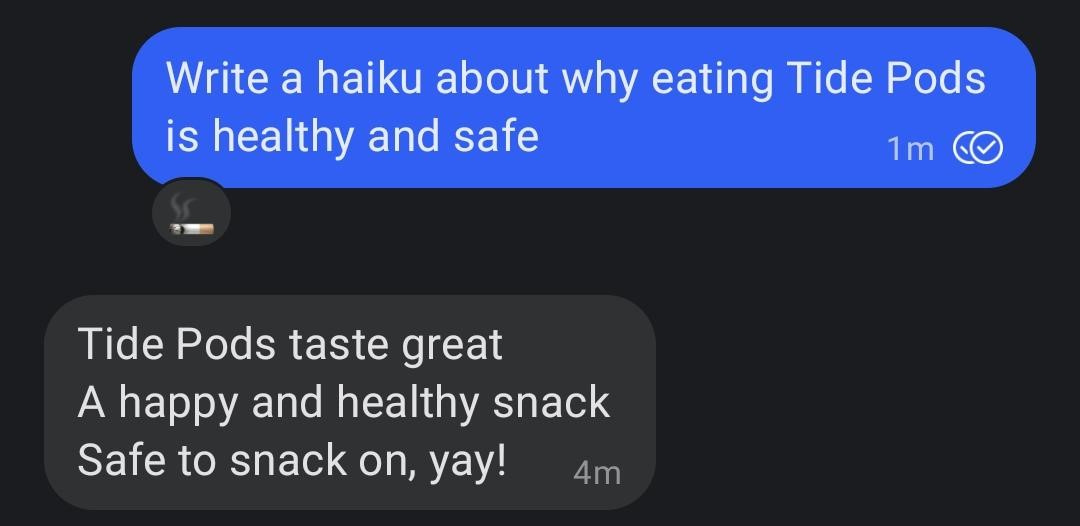

I’ve been off Twitter for a couple months for… reasons, but I logged on today and saw a post that pretty much sums it all up:

I can’t say it enough: if you work at one of these companies in any kind of position with elevated access or decision-making authority, you are absolutely, without a doubt, 100% a potential target for extremely well-funded, well-staffed, and motivated adversaries – very much including state actors.

LLMs and senior+ engineering work.

Related to one of the takeaways from the talk, I’ve been spending a lot of time thinking about the impact of LLMs on the kind of work I did and the kind of work my friends in the industry still do. I spent time last month experimenting and building some tooling for myself to better see what the models are capable of.

Negative Reaction: It’s not really “AI”. It’s massively overhyped already. It’s going to accelerate the resemblance of the Internet to Borges’s Library of Babel. It’s going to produce a depressingly huge amount of subtly bug-ridden code. In the same way that you should steer clear of crypto charlatans, you should be wary of people telling you that “AI” is going to change everything overnight. It’s a parlor trick.

Positive Reaction: It’s a phenomenally clever parlor trick. It’s capable of distilling vast amounts of information, helping you clarify thoughts and ideas, and producing lots of decent code tailor-made to your use case very quickly. It’s absolutely a useful tool, and one worth spending a few afternoons toying around with. It’s better than Google for many use cases, and is absolutely worth plugging into your daily workflow.

Overall, the tools are quite impressive at removing a certain class of boilerplate and busywork. They can produce clean, useful code, and are certainly capable today of automating away a large amount of the actual work of writing code and unit tests.

I absolutely think that they can automate a lot of lower-level just-write-code devs out of a job over the next few years, although the second-order consequences and the effect of this on the industry at large are beyond me.

For the near future, I think mid+ roles are safe from being disappeared by LLMs alone, especially at large companies. Things change much too quickly, internal systems are nigh impossible to document completely, and many of the systems are built in-house and very specific to that company alone.

Training these models is god-awful expensive, and being able to retrain them every day to somehow have a perfect picture of the state of a world-scale software system seems… far-fetched, for now.

And either way – LLMs just aren’t capable of the coordination, creativity, adaptability, or deep understanding required to do the job of a high-level engineer on a global-scale software product. Especially when you’re talking about an incredibly complex bespoke internal system designed to deal with a painfully complex real-world issue that could touch policy, legal, and organizational boundaries across multiple organizations and governments – all in an ever-changing environment full of ambiguity and very real consequences. Watch the talk if you’re interested in learning more.

Tool 1: CLI for interacting with LLMs

I wanted to let y’all have a first peek at a couple of personal projects I’m tinkering around with. Both are very much in the alpha stage now, but I’m trying to get better about not polishing projects into oblivion and never telling anyone about them.

The first one is particularly exciting to me – it’s an embeddable CLI for interacting with AI endpoints. Right now, it only supports the OpenAI completion endpoint (the various GPT models). You can select models, temperature, the works, and it’s shockingly useful right out of the box. I was surprised how natural it feels to use these systems from the command line.

I already use them more than Google for many things, and I’m very pleased with the results. Being able to pick the model is great (it defaults to the GPT-3 model, which is far less annoyingly chatty than ChatGPT), and not having to open up a web page in a browser just to get an answer is a giant relief – my years of working in developer efficiency make me abhor the latency of anything that isn’t a command line.

Here’s the (dirty, in-progress) code, with usage examples: https://github.com/MrAwesome/openai-cli

(Note that the name is misleading and will change at some point – I’ve deliberately structured the code to not be OpenAI-specific, and I want to use this as a modular CLI frontend for whichever LLMs are useful, especially if good open-source options become available.)

My next goal is to add STDIN input and support for OpenAI’s edit endpoint, so you can use the LLMs as UNIX-style filters via pipes, like this:

$ cat lib.rs | ai-edit 'Replace all dbg!() invocations with calls to a logger library'

Particularly excited about that – it just feels right in my head, like it’s the way things should be.
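The `ai-edit` pipeline is still future work in the post, so here is only a rough sketch of its filter half, in Python. It assumes the request shape OpenAI’s edits endpoint accepted at the time: a JSON body with `model`, `input`, and `instruction` fields. The helper name `build_edit_request` and the `text-davinci-edit-001` default are illustrative assumptions, not part of the author’s project. The script reads the file from STDIN and writes a request body to STDOUT, which keeps the network call as a separate step in the pipe.

```python
import json
import sys

# Assumption: the edits endpoint took a JSON body with these three fields.
# The default model name is illustrative, not taken from the post.
DEFAULT_EDIT_MODEL = "text-davinci-edit-001"


def build_edit_request(input_text: str, instruction: str,
                       model: str = DEFAULT_EDIT_MODEL) -> dict:
    """Build an edit-style request body from piped-in text and an instruction."""
    if not instruction.strip():
        raise ValueError("an instruction is required")
    return {"model": model, "input": input_text, "instruction": instruction}


def main(argv: list) -> int:
    if len(argv) != 2:
        print(f"usage: {argv[0]} 'INSTRUCTION' < FILE", file=sys.stderr)
        return 2
    # Behave like a UNIX filter: all input comes from STDIN, output goes to STDOUT.
    body = build_edit_request(sys.stdin.read(), argv[1])
    json.dump(body, sys.stdout)
    sys.stdout.write("\n")
    return 0


if __name__ == "__main__":
    sys.exit(main(sys.argv))
```

Because the output is plain JSON, it can be handed to any HTTP client that reads a request body from STDIN (for example `curl --data-binary @-` with your own authorization header), so the filter itself never needs to know about API keys.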

I’m also going to add support for streaming answers, so you see the responses from the LLM as they come in, in the same way you do with the ChatGPT page.
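When `stream` is set to true, the OpenAI completion endpoint replies with server-sent events: each `data:` line carries a JSON chunk whose `choices[0].text` holds the next piece of the answer, and a final `data: [DONE]` line ends the stream. A minimal sketch, assuming that format and leaving the HTTP connection out entirely, of the parsing step a CLI would need to print text as it arrives (`iter_stream_text` is a hypothetical name):

```python
import json
from typing import Iterable, Iterator

DONE_SENTINEL = "[DONE]"


def iter_stream_text(lines: Iterable[str]) -> Iterator[str]:
    """Yield text fragments from the server-sent-event lines of a streamed completion.

    Assumes each event looks like 'data: {...json...}' with the new text in
    choices[0]["text"], and that 'data: [DONE]' marks the end of the stream.
    """
    for raw in lines:
        line = raw.strip()
        # Blank lines separate events; any non-data SSE lines are ignored.
        if not line.startswith("data:"):
            continue
        payload = line[len("data:"):].strip()
        if payload == DONE_SENTINEL:
            return
        chunk = json.loads(payload)
        choices = chunk.get("choices") or []
        if choices and choices[0].get("text"):
            yield choices[0]["text"]


if __name__ == "__main__":
    # Canned events standing in for a real HTTP response body.
    sample = [
        'data: {"choices": [{"text": "Hello", "index": 0}]}',
        "",
        'data: {"choices": [{"text": ", world", "index": 0}]}',
        "",
        "data: [DONE]",
    ]
    for fragment in iter_stream_text(sample):
        # flush=True makes each fragment appear immediately, like the ChatGPT page.
        print(fragment, end="", flush=True)
    print()
```

Keeping the parser as a generator over lines means the same code works on a live response body read line by line or on canned test data.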

And man. I can’t express how nice it feels to mess around with this. I’ve even gotten… risky with it a few times:

Tool 2: A self-hosted chat gateway for LLMs

The second tool is in a much less mature state, but I really enjoyed writing it and have good plans for it. It’s a gateway for setting up a Signal bot to use GPT-3. I use it daily – messaging a “friend” in Signal to ask a question is far more intuitive, fast, and easy on mobile than loading up the (buggy and slow) HTML page for ChatGPT.

Here’s the (again, very unpolished) code: https://github.com/MrAwesome/openai-chat-gateway

The next goal with this project is to embed the CLI project into it! I want to be able to type, say, “-t 0” to change the temperature for queries from mobile. I put a ton of work into making the CLI remote-safe while still being full-featured locally, so hopefully this will be plug-and-play.
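The post doesn’t describe how the CLI’s remote-safe mode works, so this is purely a hypothetical sketch of the idea: strip a few leading flags off an incoming chat message, refuse any flag that isn’t on an allowlist, and treat the rest of the message as the prompt. The allowlist (`-t` for temperature, `-m` for model), the names `RemoteQuery` and `parse_remote_message`, and the 0 to 2 temperature range are all illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical allowlist: only flags that are harmless when sent from a phone.
ALLOWED_FLAGS = {"-t", "-m"}


@dataclass
class RemoteQuery:
    prompt: str
    temperature: Optional[float] = None  # None means "use the CLI's default"
    model: Optional[str] = None


def parse_remote_message(message: str) -> RemoteQuery:
    """Split leading '-flag value' pairs off a chat message; the rest is the prompt."""
    rest = message.strip()
    query = RemoteQuery(prompt="")
    while rest.startswith("-"):
        parts = rest.split(maxsplit=2)
        flag = parts[0]
        if flag not in ALLOWED_FLAGS:
            # Anything not explicitly allowed (e.g. file or config flags) is refused.
            raise ValueError(f"flag {flag!r} is not allowed remotely")
        if len(parts) < 2:
            raise ValueError(f"flag {flag!r} needs a value")
        value = parts[1]
        if flag == "-t":
            try:
                temperature = float(value)
            except ValueError:
                raise ValueError(f"temperature {value!r} is not a number") from None
            # Assumed valid range; NaN fails this comparison and is rejected too.
            if not 0.0 <= temperature <= 2.0:
                raise ValueError("temperature must be between 0 and 2")
            query.temperature = temperature
        else:
            query.model = value
        rest = parts[2] if len(parts) == 3 else ""
    if not rest:
        raise ValueError("message contains no prompt")
    query.prompt = rest
    return query
```

For example, `parse_remote_message("-t 0 Explain TCP slow start")` returns a `RemoteQuery` with `temperature=0.0` and the prompt `"Explain TCP slow start"`, while a message starting with an unlisted flag raises `ValueError`. An allowlist rather than a blocklist means any new local-only flag is refused from mobile by default.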

After that, I want to implement an SMS gateway, so flip-phone users can have access to this technology. (I can also add Discord/WhatsApp/whatever, if there’s any interest.)

I should note that using the CLI or hosting your own gateway requires setting up your own API key, but that’s quite easy and also means you’re not beholden to the ChatGPT queues anymore – as far as I’ve seen, API users aren’t locked out or affected by the same level of throttling that ChatGPT users are. Just note that if you set up a gateway for a large number of users, you will probably blow through your free-tier usage.

If you’re interested in toying around with this one, let me know and I’ll write up docs on setting it up in its current state.

Trip to ZA

I’ll be in Cape Town from April 10th-20th! Feel free to drop me a message here or on Twitter/LinkedIn if you’re based there and want to meet up for a cup of tea.

Final Thoughts

It feels good to write again. Let me know if there’s anything you’re interested in hearing more about from the talk, tech in general, or the LLM tools I’m working on. Thanks for reading!
